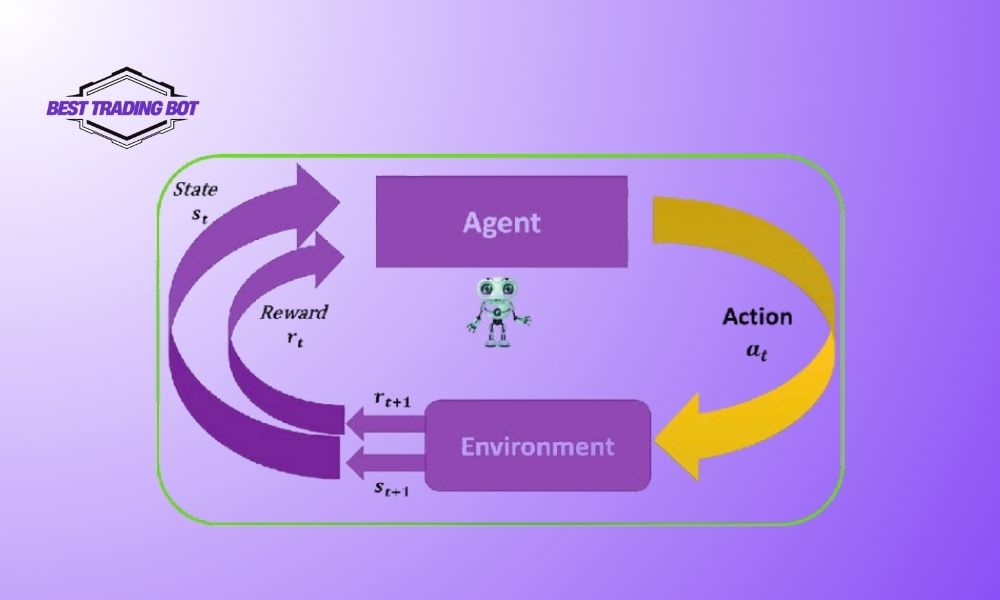

The diagram shows how an AI agent interacts with its environment as a continuous cycle: the agent observes the environment’s current state and receives a reward, then performs an action. The environment transitions to a new state and provides a new reward, and the loop repeats, allowing the agent to learn and adapt over time.


Core components of the interaction cycle

The image visualizes a dynamic loop in which the agent and its environment interact through the following steps:

Environment:

This is the context in which the agent operates. The environment provides information to the agent and reacts to the agent’s actions.

- Provides State (s_t): At each time step ‘t’, the environment presents the agent with a “state” (s_t). This state is a description of the current situation of the environment that the agent can perceive. For example, in a game, the state could be the positions of characters on the screen. In a self-driving car, the state could be data from cameras and Lidar about surrounding vehicles and obstacles.

- Provides Reward (r_t): Alongside the state, the environment provides a “reward” signal (r_t). This reward indicates how good or bad the agent’s previous action (the one that led to state s_t) was for the agent’s goal. At the very first step, before any action has been taken, there is typically no meaningful reward yet. Rewards can be positive (encouragement) or negative (punishment).
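The environment's two roles described above can be sketched in a few lines of Python. This is only an illustrative toy, not something taken from the diagram: the `CorridorEnv` class, its `reset` and `step` methods, and the reward values are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal at the right end."""
    length: int = 5
    position: int = 0

    def reset(self) -> int:
        """Start a new episode and return the initial state s_0."""
        self.position = 0
        return self.position

    def step(self, action: int) -> Tuple[int, float, bool]:
        """Apply an action (0 = left, 1 = right); return (next state, reward, done)."""
        move = 1 if action == 1 else -1
        # Clamp the position so the agent cannot leave the corridor.
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        # Assumed reward scheme: small penalty per step, bonus on reaching the goal.
        reward = 1.0 if done else -0.1
        return self.position, reward, done


env = CorridorEnv()
state = env.reset()
next_state, reward, done = env.step(1)
print(state, next_state, reward, done)  # prints: 0 1 -0.1 False
```

Here the state is just the agent's cell index. In a realistic setting it would be whatever the agent can perceive, such as screen contents or sensor readings.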

Agent:

This is the AI entity that makes decisions and performs actions.

- Observation (Perception): The agent perceives the “State (s_t)” and “Reward (r_t)” from the environment. The diagram does not label them explicitly, but the agent’s sensors are implied by the way it receives s_t and r_t.

- Decision-Making (Policy): Based on the current state (s_t) and the objective of maximizing cumulative reward over time, the agent uses its “policy” to select an “Action (a_t)”. This policy can be a function, a lookup table, or a complex neural network, mapping states to actions. This is the “brain” of the agent. In reinforcement learning, this policy is gradually improved through interactions. Initially, the agent might act randomly, but by receiving rewards, it learns which actions lead to better outcomes in specific states.

- Executes Action (a_t): The agent executes the chosen “Action (a_t)” upon the environment through its actuators (e.g., moving a game piece, accelerating a car, displaying a response).
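To make the idea of a policy as a lookup table concrete, here is a minimal sketch. It assumes an epsilon-greedy selection rule, which is one common choice and is not named in the text above. The names `ACTIONS`, `q_table`, and `select_action` are hypothetical.

```python
import random
from collections import defaultdict

ACTIONS = [0, 1]  # hypothetical discrete action set: 0 = left, 1 = right

# Lookup table of estimated action values, one entry per (state, action) pair.
q_table = defaultdict(float)


def select_action(state, epsilon=0.1, rng=random):
    """Epsilon-greedy policy: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    # Pick the action with the highest estimate; ties go to the first action.
    return max(ACTIONS, key=lambda a: q_table[(state, a)])


# Pretend the agent has already learned that "right" is good in state 3.
q_table[(3, 1)] = 0.8
print(select_action(3, epsilon=0.0))  # prints: 1
```

With `epsilon` above zero the agent occasionally picks a random action, which corresponds to the early, exploratory behavior described above.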

Interaction and Learning Loop:

- After the agent performs “Action (a_t)”, the environment transitions to a “New State (s_{t+1})” and provides a “New Reward (r_{t+1})”.

- The agent then observes this new state and new reward, and selects a new action.

- This cycle repeats. With each iteration, especially in reinforcement learning, the agent adjusts its policy to select actions that lead to higher future rewards. This loop is the core of how an AI agent interacts with its environment to learn and improve.
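The loop above can be sketched end to end in Python. This example assumes tabular Q-learning as the update rule, which is one standard way to "adjust the policy" and is not specified by the diagram. The corridor environment, the function names, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all hypothetical choices for illustration.

```python
import random
from collections import defaultdict

N_STATES, GOAL = 5, 4   # hypothetical corridor: cells 0..4, goal at cell 4
ACTIONS = [0, 1]        # 0 = left, 1 = right


def env_step(state, action):
    """Toy environment: return (next_state, reward, done)."""
    next_state = max(0, min(N_STATES - 1, state + (1 if action == 1 else -1)))
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # the policy is derived from this lookup table
    for _ in range(episodes):
        state, done = 0, False  # the environment provides s_0
        while not done:
            # The agent selects a_t (epsilon-greedy over its current estimates).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            # The environment returns s_{t+1} and r_{t+1}.
            next_state, reward, done = env_step(state, action)
            # The agent adjusts its estimates (Q-learning update).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q = train()
# Greedy action in each non-goal state after training.
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(greedy_policy)
```

In this toy setting the greedy policy should end up preferring "right" in every cell, since that is the shortest route to the only positive reward.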

Connecting to the traditional PEAS framework

Although the image focuses on the reinforcement learning model, we can still connect it to the broader PEAS (Performance measure, Environment, Actuators, Sensors) framework:

Performance Measure: In the context of the image, the primary performance measure is the total “Reward” the agent accumulates over time. The agent strives to maximize this value.

Environment: Clearly represented in the image, providing “State” and “Reward”, and receiving “Action”.

Actuators: These are how the agent performs its “Action (a_t)” on the environment.

Sensors: These are how the agent perceives the “State (s_t)” and “Reward (r_t)” from the environment.
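As a small illustration of the performance measure, the total reward for an episode can be computed as below. The optional discount factor `gamma` is an assumption added here: it is a common convention for weighting near-term rewards more heavily, and with `gamma=1.0` the function reduces to the plain sum described above. The function name and the sample rewards are hypothetical.

```python
def episode_return(rewards, gamma=1.0):
    """Sum of rewards, each weighted by gamma raised to its time step."""
    total = 0.0
    # Work backwards so each step folds in the discounted future return.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [-0.1, -0.1, -0.1, 1.0]  # hypothetical rewards from one episode
print(episode_return(rewards))             # undiscounted total
print(episode_return(rewards, gamma=0.9))  # discounted total
```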

The nature of State, Action, and Reward

- State: Can be simple (e.g., room temperature) or very complex (e.g., all pixel data from a self-driving car’s camera). Effective state representation is crucial.

- Action: Can be discrete (e.g., move left, move right) or continuous (e.g., the steering angle of a wheel).

- Reward: Designing the reward function is an art. It must accurately reflect the agent’s goals. Sparse rewards (received only at the end of a task) can make learning much harder than dense rewards (received frequently).
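The difference between sparse and dense rewards can be seen in a short sketch. Both functions below are hypothetical examples for a one-dimensional corridor with a goal cell; the specific reward values are assumptions, not recommendations.

```python
GOAL = 4  # hypothetical goal cell in a one-dimensional corridor


def sparse_reward(next_state: int) -> float:
    """Signal only when the task is completed."""
    return 1.0 if next_state == GOAL else 0.0


def dense_reward(state: int, next_state: int) -> float:
    """Signal on every step: progress is rewarded, retreat is penalized."""
    if next_state == GOAL:
        return 1.0
    progress = abs(GOAL - state) - abs(GOAL - next_state)
    return 0.1 * progress


path = [0, 1, 2, 3, 4]  # states visited when walking straight to the goal
print([sparse_reward(s2) for s2 in path[1:]])
# prints: [0.0, 0.0, 0.0, 1.0]
print([dense_reward(s1, s2) for s1, s2 in zip(path, path[1:])])
# prints: [0.1, 0.1, 0.1, 1.0]
```

With the sparse version the agent gets no feedback until it happens to reach the goal, while the dense version gives a hint on every step. The trade-off is that a hand-shaped dense reward can encode the designer's assumptions about what counts as progress.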

Importance of this model

This interaction model based on state, action, and reward is central to many breakthroughs in AI, including:

- Robots learning to walk or perform complex manipulations.

- AI playing games at superhuman levels (e.g., AlphaGo, Atari game-playing agents).

- Recommendation systems optimizing content for users.

- Resource management in complex systems.

Understanding how an AI agent interacts with its environment according to this model allows us to design increasingly intelligent agents capable of autonomous learning and adaptation in dynamic and uncertain settings.

In summary, an AI agent interacts with its environment through a loop of perceiving state and reward, taking action, and observing outcomes to learn and adapt.